Computers Art & Design

Computer Art here relates to the early development of artworks generated on computers mainly by programming, in other words algorithmic art. We also touch on artworks generated by computers using artificial intelligence (AI) approaches. AI approaches have also been used to try to produce critical evaluations of artworks based on given guidelines. In the following we give a very brief survey of computers in art, focusing on the early years and also on AI art.

This field is evolving very rapidly, and we suggest a look at the Linz (Austria) based Ars Electronica platform for art, technology and society.

In a broad perspective, algorithmic art (or generative art), viewed as the execution of a design based on some set of rules, be it in architecture, decoration or other domains, goes back into ancient history. The rules could be related to the movement of the sun and planets, or correspond to other considerations embodied in the use of, frequently, geometric forms, as for instance on the ancient Indus Valley pottery of the Mohenjo-daro/Harappa period (2000 BC), the Hindu Sri Yantra symbol, the geometric patterns in African tribal art, the Aztec Calendar Stone, the Egyptian pyramids or, in more recent times, Islamic art.

Figure: From left to right: Sri Yantra from the Sringeri Sharada Temple (India), vase from Harappa, the monolith of the Stone of the Sun, tiles from the Great Mosque of Kairouan (Tunisia), and screenshot from the Atari Mozart musical dice program.

In the old days the patterns in a design were laid out by hand, following some symmetry considerations or a geometrical pattern. Within this context one can mention the European Renaissance painters’ use of perspective in “costruzione legittima”, Leonardo da Vinci’s geometrical construction of shadows, and Albrecht Dürer’s “Four Books on Measurement”, with its discussions of the use of geometric constructions in various designs. The 18th century musikalisches Würfelspiel or “musical game of dice”, which can qualify for the term generative music, is a work attributed to W. Mozart (K6.516f) in which 16 bars of music from 176 (and 16 from 96 trios) are randomly (aleatorily) combined using dice throws and a lookup table to produce a large number of unique waltzes (in 1991 Chris Earnshaw wrote a public domain implementation for the Atari computer).
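The dice-and-lookup-table procedure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the table contents are placeholder fragment numbers rather than the historical table, and the reading that two dice (sums 2 to 12, i.e. 11 rows × 16 positions = 176) select the waltz bars while one die (6 rows × 16 positions = 96) selects the trio bars is inferred from the numbers quoted above. The names `build_table` and `roll_piece` are hypothetical.

```python
import random

N_POSITIONS = 16  # bars in each generated part


def build_table(rows, cols=N_POSITIONS):
    """Placeholder lookup table (NOT the historical one): row = dice result,
    column = bar position, entry = number of a pre-composed fragment."""
    return [[r * cols + c + 1 for c in range(cols)] for r in range(rows)]


WALTZ_TABLE = build_table(11)  # 11 possible sums of two dice -> 176 fragments
TRIO_TABLE = build_table(6)    # 6 faces of one die -> 96 fragments


def roll_piece(rng):
    """Pick one fragment per bar position by throwing dice."""
    waltz = []
    for pos in range(N_POSITIONS):
        total = rng.randint(1, 6) + rng.randint(1, 6)  # two dice: 2..12
        waltz.append(WALTZ_TABLE[total - 2][pos])
    trio = []
    for pos in range(N_POSITIONS):
        face = rng.randint(1, 6)                        # one die: 1..6
        trio.append(TRIO_TABLE[face - 1][pos])
    return waltz, trio


if __name__ == "__main__":
    waltz, trio = roll_piece(random.Random())
    print("waltz fragments:", waltz)
    print("trio fragments: ", trio)
```

Under these assumptions each bar position has 11 candidate fragments, so the waltz part alone allows 11^16 different combinations, which is why the game yields such a large number of distinct pieces.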

We look here briefly at how computers entered into the realm of music, literature, visual arts and design, where they now play an important role.

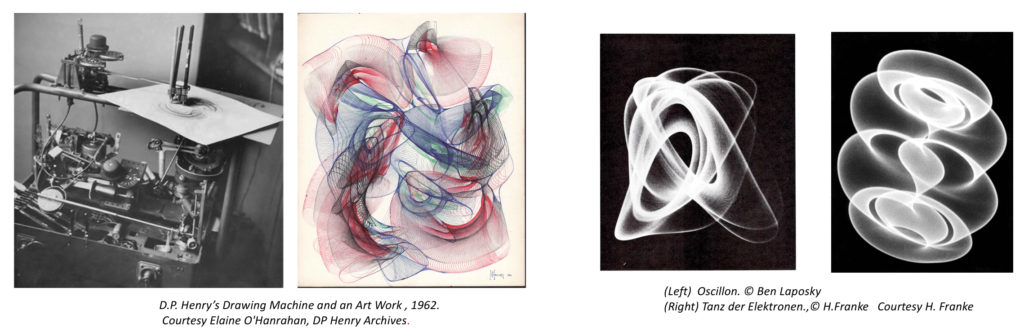

Before digital computers, machine-generated artworks (related also to the interest in movement in art and its use of moving objects, kinetic art) relied on mechanical devices, e.g. spinning disks, harmonographs, various pendula etc., with which the artists could record traced shapes on paper or video and also introduce random perturbations in the harmonic movement. The brothers John and James Whitney (USA) developed innovative practices in animation. John Whitney used pendula in combination with a light source and slit to record movement traces, as the equivalent of vibration, on the soundtrack area of a film and thus produced early electronic music. In the late 1950s and early 1960s John Whitney adapted the analog computer targeting mechanism of an anti-aircraft gun to allow filming geometric shapes, which could be moved in a programmed manner (they called it “incremental drift”). This evolved into motion control photography. James Whitney’s film Lapis was shot with this machine, which moved handmade artworks of dot patterns.

Desmond Paul Henry (UK) in the early 1960s constructed electronically driven drawing machines based around the mechanics of World War II analogue bomb-sight computers. The drawing machines could not be pre-programmed or store information as in a conventional computer, and the output was not reproducible. Henry had limited control, allowing him to intervene spontaneously in the course of image production. In the 1950s Ben Laposky (USA) and Herbert Franke (Germany) created electronic artworks by combining diverse types of electrical waveforms at the inputs of an oscilloscope and photographing the resulting images that appeared on the fluorescent screen. Laposky called these “electronic abstractions” and “Oscillons”. The image shows Franke’s 1961 “Tanz der Elektronen / Dance of the Electrons”. In 1962 he produced an animated sequence with the same title (see Franke’s YouTube channel).

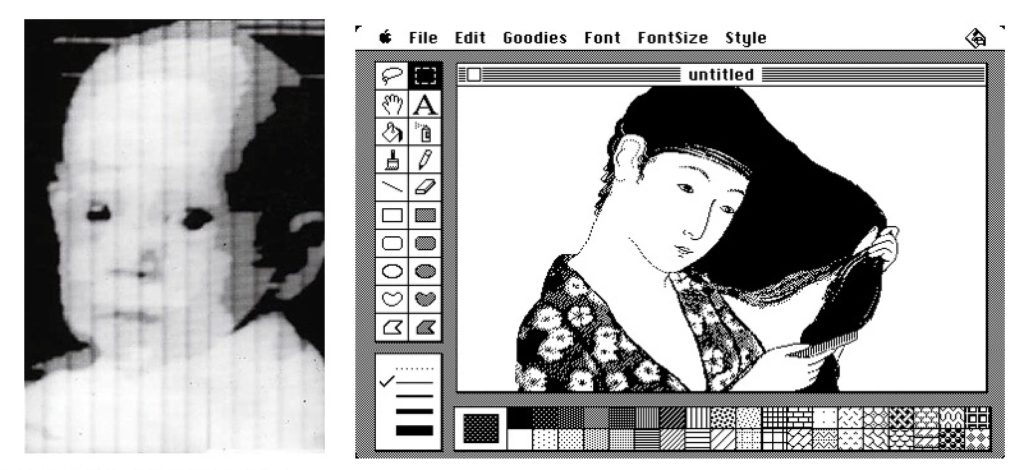

One needs to realize that the first design and art works were made on large, not easily accessible “mainframe” computers. These were slow and had poor input/output capabilities (see computer section). Initially visualization was restricted to cathode ray screens, and input/output was handled by punched cards or tape. In the 1950s XY and strip-chart recorders appeared, which made it possible to print the artworks. In a number of cases the result of programming an artwork was seen only after plotting, without any preview. Today it may seem obvious that you can transfer an image into computer memory. This became possible in the late 1950s. Possibly the first image to be scanned into a computer was by Russell Kirsch, who designed a rotating drum scanner (1957) with the SEAC team. He digitized an image of his son, Walden.

Image above: (left) Russell Kirsch’s scan of his son Walden’s photograph (Courtesy Computer History Museum) and (right) Apple MacDRAW, with Susan Kare’s drawing. Kare created many interface elements and fonts for the Mac.

Early “painting” on computers was done by scientists and engineers, initially using the scientific programming languages ALGOL 60 or Fortran. Graphic extensions to these were written by various people, such as Georg Nees (G1, G2 and G3 in ALGOL 60), Frieder Nake (COMPART, Fortran, around 1963), Leslie Mezei (SPARTA and ARTA, in Fortran, late 1960s), etc.

In 1973 Richard Shoup produced SuperPaint at the Xerox PARC laboratory. In 1981 the UK company Quantel developed Paintbox, a computer graphics system that allowed video images to be manipulated digitally. The first widespread, user-friendly painting program appeared only in 1984 on the Apple Macintosh, thanks to its (monochrome) GUI: MacDraw, along with QuickDraw and MacWrite. Adobe Illustrator 1.0 appeared on the Mac in 1986 and Adobe Photoshop (developed around 1988 by Thomas Knoll) in 1990.

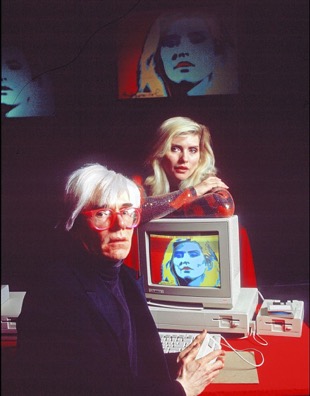

A year after the Mac, the Atari ST appeared with its monochrome GEM GUI and the Commodore Amiga 1000 was launched with a colour GUI. The Amiga launch became famous because of the participation of Andy Warhol, who drew a portrait during the event (image above). Many other programs have been launched since, including Microsoft Paintbrush, Corel Painter, ArtRage, GIMP, Krita, OpenCanvas etc.

In 1960-61 the philosopher Max Bense, with Elisabeth Walther, started the publication of rot (red). Bense worked on the development of information aesthetics, giving a theoretical foundation for early computer artists. The rot #6 issue included a literary experiment in which a computer randomly generated text.

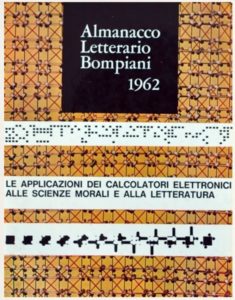

The technically complicated nature of early computer art induced collaborations between artists, scientists and corporations, such as Olivetti in Italy and Bell Laboratories in the USA. A unique collection of texts on computers and “moral sciences and literature” was produced by the Italian Almanacco Letterario Bompiani in 1962, followed soon after by the exhibition Arte programmata. Arte cinetica. Opere moltiplicate. Opera aperta (“Programmed art. Kinetic art. Multiplied works. Open works”), organized by Bruno Munari and Giorgio Soavi at the Olivetti showroom in Milan. The Almanacco includes the “computer poetry” Tape Mark I, programmed by Nanni Balestrini.

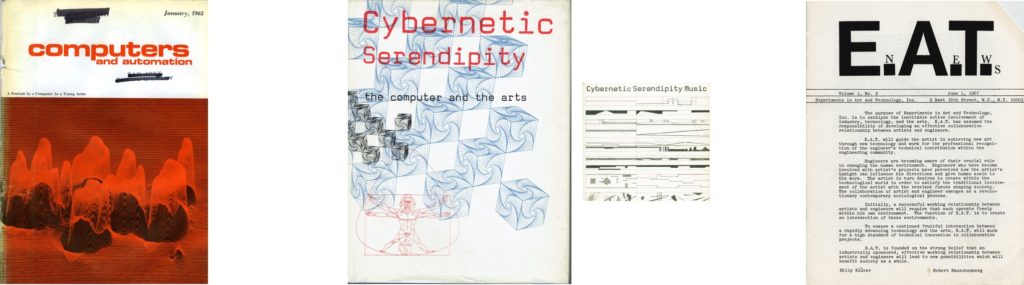

In 1963, the magazine Computers & Automation started showing computer art and established the first computer art contest. In 1965 Bense in Stuttgart and New York’s Howard Wise Gallery organised the first exhibitions displaying computer art. In 1966, a group of New York artists with engineers and scientists from the Bell Telephone Laboratories created the event “9 Evenings: Theatre and Engineering”. In 1967, engineers Billy Klüver and Fred Waldhauer, and artists Robert Rauschenberg and Robert Whitman launched the organization Experiments in Art and Technology (E.A.T.) to stimulate collaboration between artists and engineers. E.A.T. organised several important events in the next few years, including the exhibition “Some More Beginnings” in 1968. The Computer Technique Group (CTG) emerged in Japan (1966-1969). Artists in this group collaborated with IBM Japan.

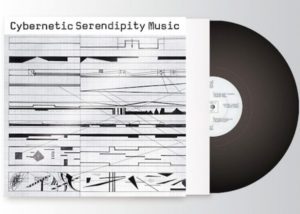

In 1968, the Cybernetic Serendipity exhibition at the Institute of Contemporary Arts, London, organised by Jasia Reichardt, featured computer art in the context of contemporary art. The disc shown in the illustration includes some of the first pieces of computer music, including the “Illiac Suite”, one of the first computer musical compositions. Other events at the time include “Tendencije 4, Computers and Visual Research” in Zagreb and “On the Path to Computer Art” in Berlin. The Computer Arts Society (C.A.S.) was born in London, starting with an inaugural exhibition “Event One” in 1969. C.A.S. was set up as a branch of the British Computer Society to facilitate the use of computers by artists. It produced the bulletin PAGE. The journal Leonardo was founded in 1968 in Paris (later transferred to the USA): an international peer-reviewed research journal that features articles written by artists and focuses on the interactions between the contemporary arts, sciences and new technologies. In 1969 Andy van Dam founded the Association for Computing Machinery Special Interest Group on Computer Graphics (SIGGRAPH), which became one of the most influential groups in computing. The now famous annual SIGGRAPH conference was launched in 1974.

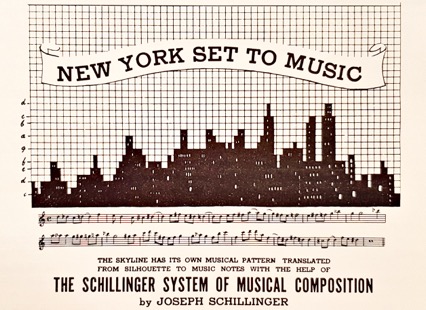

In the late 1940s Joseph Schillinger gave a mathematical analysis of musical styles (“The Schillinger System of Musical Composition”), relevant to computer music, and Karlheinz Stockhausen also explored aleatory methods of music composition.

Schillinger book poster. New York Skyline was composed as a piano piece by Heitor Villa-Lobos.

The first steps in music by computers started with Alan Turing monitoring the functioning of the Mark I computer (1948-1950) by connecting its internal circuits to a loudspeaker, generating “melody-like” sound, and, in 1951, with specific musical intent, with Christopher Strachey’s programming of the Mark II. Similar work was done on the Australian CSIR Mk1. Lejaren Hiller and Leonard Isaacson composed the Illiac Suite for string quartet in 1956 (ILLIAC computer, University of Illinois). In 1957 Max Mathews (Bell Labs) wrote the MUSIC program, the first to use acoustic waveforms, and a 17-second piece was performed on an IBM 704 computer. The MUSIC-N series followed, with a library of functions built around simple signal-processing and synthesis routines. Other early work includes that of Iannis Xenakis: the “Stochastic Music Program”.

John Chowning invented the FM Synthesis algorithm in 1967, which was used later e.g. on Yamaha chips. Digital synthesizers took off in the late 1970s, with a multitude of different interfaces. In 1983, the Musical Instrument Digital Interface (MIDI) was established and popularised by systematic inclusion on personal computers like the Atari ST.

A unique disc of early computer music, including the Illiac Suite, was produced as part of the Cybernetic Serendipity event (see previous section). It has music both composed and performed by a computer and also has pieces by John Cage and Iannis Xenakis. You can watch a short film about it on YouTube (click on the image).

The original vinyl records are hard to find, but the disc has now been reissued by Vinyl Factory and you can read more about this classic on their website.

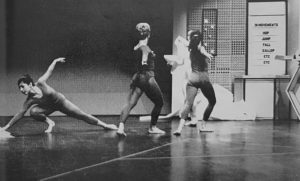

Possibly the first use of computers in dance was by Jeanne H. Beaman in collaboration with Paul le Vasseur (University of Pittsburgh, Computer Center) in 1964. They started with an aleatory approach, giving three lists of tempo, movement and direction commands for a solo dance to a computer, which selected from these at random. This was then extended to duets and larger groups, with the computer also randomly selecting starting points for the dancers on the dance floor. In England Ann Hutchinson similarly used the computer in 1968. Choreographer Merce Cunningham, who collaborated with John Cage, had been interested in such an aleatory approach to dance since the 1950s.
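The random selection from three command lists can be sketched as follows. The list entries, the floor dimensions and the function names are hypothetical placeholders chosen for illustration; the original lists used by Beaman and le Vasseur are not reproduced here.

```python
import random

# Hypothetical command lists (the historical lists are not given in the text).
TEMPI = ["slow", "moderate", "fast"]
MOVEMENTS = ["walk", "turn", "leap", "fall", "reach"]
DIRECTIONS = ["forward", "backward", "left", "right"]


def random_solo(n_steps, rng):
    """One solo: each step draws a tempo, a movement and a direction at random."""
    return [
        (rng.choice(TEMPI), rng.choice(MOVEMENTS), rng.choice(DIRECTIONS))
        for _ in range(n_steps)
    ]


def random_group(n_dancers, n_steps, rng, floor=(10.0, 10.0)):
    """Extension to duets and groups: also draw a random starting point
    on a rectangular floor (dimensions are an assumption)."""
    return [
        {
            "start": (rng.uniform(0.0, floor[0]), rng.uniform(0.0, floor[1])),
            "steps": random_solo(n_steps, rng),
        }
        for _ in range(n_dancers)
    ]


if __name__ == "__main__":
    for dancer in random_group(2, 4, random.Random()):
        print(dancer)
```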

J.H.Beaman dancers, with a command screen, instructing them how to move. From: Cybernetic Serendipity

In 1966 Michael Noll of Bell Labs proposed using a computer to plan a complete choreography and made a 3-minute film with stick figures representing dancers (Dance Magazine, 1967). The idea of using the computer was taken up by Merce Cunningham in Changes/Notes on Choreography, in 1968: “I think a possible direction now would be to make an electronic notation… that is three dimensional… it can be stick figured or whatever, but they move in space so you can see the details of the dance; and you can stop it or slow it down… [it] would indicate where in space each person is, the shape of the movement, its timing”. Cunningham went as far as envisaging details like the inclusion of facial expressions or finger movements. He also pointed out that the computer was not just a tool, but could “reveal” unexpected possibilities.

Michael Noll’s dancer stick figure animation on a computer screen.

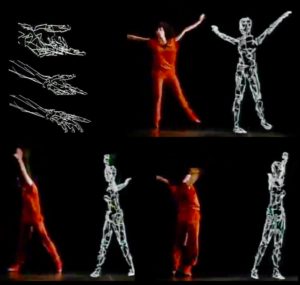

Choreographers thus suggested using computers to compose and edit dance notation scores and then to translate these scores into animation. The computer would both record the dance and assist the choreographer in visualizing and experimenting with movement during the creative process. Computer-animated figures have also been integrated into live dance performance, as shown in the example from The Catherine Wheel here, in what is possibly the first realistic animation, by Rebecca Allen, who started working on computerised animation in the late seventies and showed her first animation work from 1982.

A 1983 sequence of a dancer figure in Rebecca Allen’s (New York Institute of Technology’s Computer Graphics Laboratory) animation for Twyla Tharp choreography of The Catherine Wheel. This animation is projected along with dancers during the dance. Source: YouTube video. Courtesy Rebecca Allen.

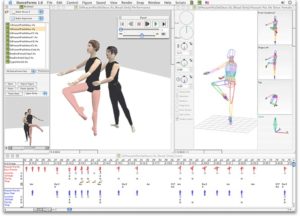

Approaches to describing dancers’ body movements in detail, i.e. dance notation, have been proposed in Western dance since the 17th century. Today Laban Kinetography (Labanotation) and Benesh notation are popular. Since the mid seventies several groups, involving choreographers and computer scientists, worked to develop an interactive graphics editor for dance notation, at a time when graphical user interfaces were nonexistent. An early example was Choreo (1977) from the University of Waterloo. Development of Life Forms by Thomas Calvert’s group was started in 1986 for the Apple Mac and Silicon Graphics computers. It was used by Cunningham from 1990 and tweaked according to recommendations of Cunningham and others. Commercial computer choreography packages appeared, starting with Life Forms, now DanceForms by Credo Interactive. Others followed: Calaban, LabanReader (free software), Labanatory, Labanotation, MacBenesh etc.

Screen image of DanceForms, 2018. ©Credo Interactive.

These allow the choreographer to try out ideas and design dance sequences before even meeting the dancers. The choreographer selects a figure (or several figures) and uses controls to move limbs and limb segments. The choreographer defines some key frames, i.e. the dancers’ postures and positions at chosen moments. The computer then builds the intermediate frames of the movement between the ones defined by the choreographer.
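The generation of intermediate frames between two key frames can be illustrated with a minimal sketch. It assumes a pose is a dictionary of joint angles in degrees and uses plain linear interpolation; this is a simplification for illustration and not a description of how Life Forms or DanceForms actually interpolate. The joint names and the function `inbetween` are hypothetical.

```python
def inbetween(key_a, key_b, n_frames):
    """Return n_frames poses strictly between two key poses,
    using linear interpolation of each joint angle."""
    frames = []
    for i in range(1, n_frames + 1):
        t = i / (n_frames + 1)  # fraction of the way from key_a to key_b
        frames.append(
            {joint: (1 - t) * key_a[joint] + t * key_b[joint] for joint in key_a}
        )
    return frames


if __name__ == "__main__":
    # Hypothetical key poses: joint angles in degrees.
    pose_start = {"left_elbow": 0.0, "right_knee": 10.0}
    pose_end = {"left_elbow": 90.0, "right_knee": 50.0}
    for frame in inbetween(pose_start, pose_end, 3):
        print(frame)
```

With three intermediate frames the elbow passes through a quarter, a half and three quarters of its total rotation. Real animation packages typically offer smoother schemes (e.g. splines or easing curves), but the principle of filling in poses between choreographer-defined key frames is the same.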

Dancer Robert Wechsler, wearing EKG electrodes used to generate muscle-controlled music and lights, with computer engineer Frieder Weiss. Photo: Bernd Tell. Courtesy Robert Wechsler.

The evolution of technology has allowed progressively greater interactivity, e.g. by monitoring the dancers’ limb movements or cardiac activity, as in the image shown here, and translating it into sound during the dance. Developments in hardware and software for music composition, sound generation, choreography, theatre lighting and the visual arts are facilitating an increasing integration of these independent media.

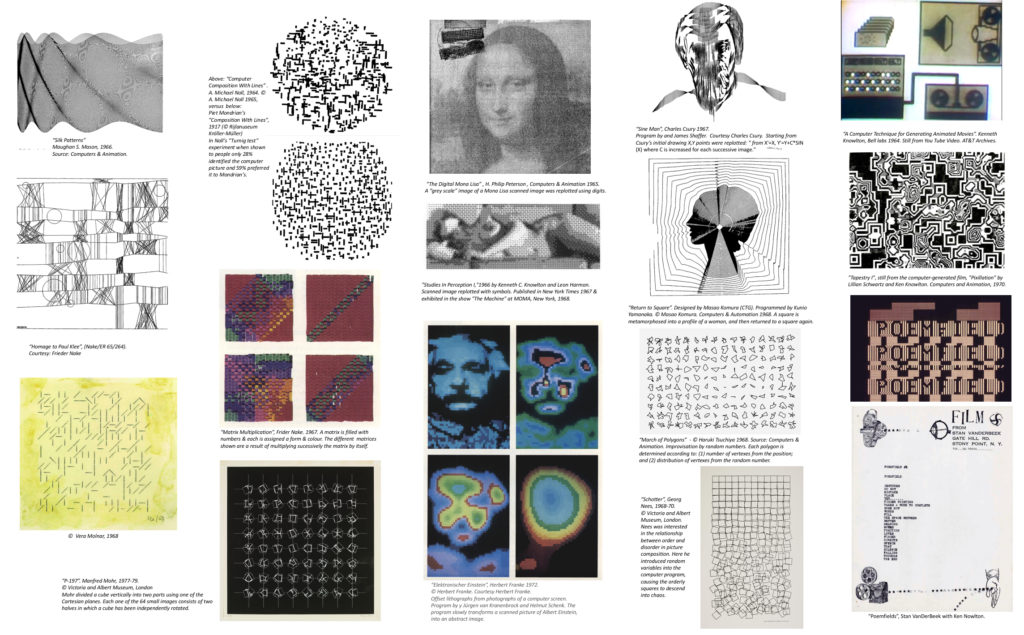

This page shows some works of the digital art pioneers, whose work started appearing publicly on the pages of the Computers and Automation magazine from January 1963. The magazine started a computer art competition and a number of now well-known people in digital art presented their work in it: Charles Csuri, Frieder Nake, Manfred Mohr, Leslie Mezei, Georg Nees, Lillian Schwartz, Ken Knowlton and others.

Their works use different approaches: representation of mathematical functions or their combinations; representational imaging (images scanned into a computer and then printed using shades of grey with characters, or manipulated in some way); combinatorial representation (creating images from several objects combined in different ways); or the use of randomness or chaos to introduce unpredictability.

You can find an extensive catalogue of digital art at e.g. dada.compart-bremen.de or the Digital Art Museum. A compilation of interesting texts by some of these artists may be found in the book “Artist & Computer”.

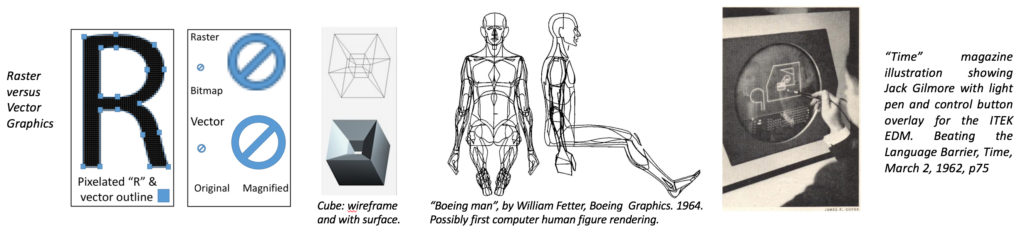

Computer graphics. There are two different approaches to generating an image: raster and vector graphics. In raster graphics the image is generated point by point, i.e. pixel by pixel. Every point in the picture is stored independently in a file, and its value usually represents colour (it would be 0 or 1 for black and white). The file can therefore be very large for a high-resolution image. The alternative is vector graphics, in which a picture is built up of vectors: lines connecting points or, in practice, various mathematically definable primitive shapes (lines, circles, triangles etc.). The image is then stored as a set of these shapes. Thus in vector graphics a straight line is determined by its end points only, while in raster graphics you need to define all the points constituting the line. The former is more economical in terms of memory and more easily scalable.
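The difference in storage between the two representations of a straight line can be made concrete with a small sketch. The vector form keeps only the two end points, whereas the raster form needs every pixel along the line; here the pixels are computed with Bresenham's line algorithm, a standard rasterization method chosen for illustration (the text above does not name a specific algorithm). The function name `rasterize_line` is hypothetical.

```python
def rasterize_line(x0, y0, x1, y1):
    """Return the list of integer pixel coordinates covering the line
    from (x0, y0) to (x1, y1), using Bresenham's algorithm."""
    pixels = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1   # step direction in x
    sy = 1 if y0 < y1 else -1   # step direction in y
    err = dx + dy               # running error term
    while True:
        pixels.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:            # step in x
            err += dy
            x0 += sx
        if e2 <= dx:            # step in y
            err += dx
            y0 += sy
    return pixels


if __name__ == "__main__":
    vector_line = ((0, 0), (99, 40))          # vector form: two end points
    raster_line = rasterize_line(0, 0, 99, 40)  # raster form: every pixel
    print("vector representation stores", len(vector_line), "points")
    print("raster representation stores", len(raster_line), "pixels")
```

Scaling the vector line only requires multiplying its two end points, after which it can be rasterized again at the new resolution; scaling the pixel list directly would require resampling.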

For a three-dimensional (3D) object you need to describe both its shape and its surface. In traditional design, as was done for a car body, you would construct a skeleton following predefined drawings and cover it with a “skin”: a kind of clay that would be molded and refined to meet the designer’s requirements. In computer-based design this procedure corresponds to building up a wireframe model, composed of interconnected (rigged) parts, and applying a skin or texture, along with various lighting effects, to render it realistically. A step beyond this is solid modelling. This is now frequently done by a combination of two methods: Constructive Solid Geometry (CSG), which uses solid primitives (cylinder, sphere, cone, …) as building blocks from which the model is constructed, and Boundary Representation (B-Rep), which defines the bounding surfaces of a solid object as the results of various spatial transformations of 2D profile curves. Solid modelling allows a more complete description of the objects to be built.

Another feature of modern CAD systems is the ability to create parametric models, in which every entity in the solid model has parameters associated with it that control its geometric properties and its location within the model. In some cases the system keeps track of the history of the model’s evolution, which allows it to be updated and regenerated. Some parametric modelers also allow constraints to be added to the models. These can be used to construct relationships between parameters.

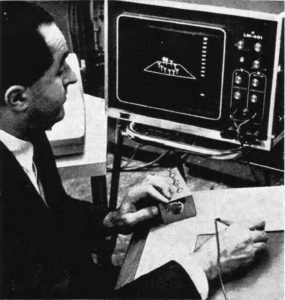

Brief glance at CAD development. On the technical, machining and design side, industrial processes involved elements of numerical control. CAD programs started to be developed in the automotive and aerospace industries and in universities in the US and Europe. The first programs appear to be the numerical control machining program by Patrick Hanratty, PRONTO (Program for Numerical Tooling Operations) at General Electric, followed by the general purpose CAD program Design Augmented by Computer, DAC-1, released in 1961 by GM and IBM with Hanratty’s involvement. An important development was the appearance of interactive systems with light pen input. Light pens were developed about 1952 to select points on the video display of MIT’s Whirlwind computer and were then used on the US defense system SAGE. Around 1959 a project called the Electronic Drafting Machine or EDM was initiated at Itek, and in 1962 they started marketing a simple light pen input drafting system using geometrical shapes. In 1963 Ivan Sutherland published his light pen based Sketchpad program, which was part of his MIT PhD thesis. These innovations established the basis for graphical interfaces. In 1971 the Automated Drafting And Machining (ADAM) program was introduced by Hanratty’s company, Manufacturing and Consulting Services (MCS), which provided the software for companies like McDonnell Douglas and Computervision.

Vector graphics in CAD is based on developments by a number of mathematicians, in particular in France by Paul de Casteljau (1958, at Citroën) and Pierre Bézier (at Renault), who formulated a means of drawing free-form curves and surfaces, named Bézier curves and surfaces. In the mid 1960s work on this aspect was also done by Steven Coons at MIT. Bézier’s work led to Renault’s UNISURF program. This work eventually evolved into Dassault Systèmes’s CATIA. The British Cambridge CAD group made substantial contributions to solid modelling starting from the mid 1960s. Milestones in 3D CAD development include the formulation of NURBS (Non-uniform Rational B-Splines, PhD thesis, 1975) by Ken Versprille, which underlies much of modern 3D curve and surface modelling. Amongst other developments in the late 1970s one can note the release of PADL (Part & Assembly Description Language) by the University of Rochester group of Herb Voelcker. An excellent description of CAD development may be found in the book “The Engineering Design Revolution” by David Weisberg, available online.
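To give an idea of how a Bézier curve is defined by a handful of control points, here is a minimal sketch of de Casteljau's algorithm, which evaluates a point on the curve by repeated linear interpolation between neighbouring control points. The control points below are arbitrary illustrative values, and the function name `de_casteljau` is hypothetical; this is not code from UNISURF or any CAD system.

```python
def de_casteljau(control_points, t):
    """Evaluate the Bézier curve defined by control_points at parameter t
    (0 <= t <= 1) by repeated linear interpolation."""
    points = [tuple(p) for p in control_points]
    while len(points) > 1:
        # Replace each neighbouring pair by the point a fraction t between them.
        points = [
            tuple((1 - t) * a + t * b for a, b in zip(p, q))
            for p, q in zip(points, points[1:])
        ]
    return points[0]


if __name__ == "__main__":
    # Four control points define a cubic Bézier curve (illustrative values).
    control = [(0, 0), (1, 2), (3, 2), (4, 0)]
    for i in range(5):
        t = i / 4
        print(t, de_casteljau(control, t))
```

The curve starts at the first control point and ends at the last one, while the inner control points pull the curve towards them without generally lying on it. This is what makes the representation convenient for a designer shaping a free-form curve.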

In 1969 MAGI released the first commercial modelling program: SynthaVision. The CAD/CAM world progressed rapidly in the 1970s with advances in hardware and software, appearing on UNIX workstations. The appearance of personal computers popularised CAD, starting with the well-known AutoCAD program produced for IBM PCs by Autodesk in 1983. AutoCAD was mainly 2D. Three-dimensional graphics programs were developed in several companies. In 1987 Pro/ENGINEER (now PTC Creo), by Samuel Geisberg, a Russian émigré to the US, was released by PTC (Parametric Technology Corporation): a CAD program based on solid geometry and feature-based parametric techniques. It ran on UNIX workstations since PCs were not powerful enough. In later years several 3D modelling programs, like ACIS and Parasolid, which trace back to the Cambridge CAD group, were released. In the 1990s PCs became powerful enough for 3D CAD. In 1995 SolidWorks for Windows was released, followed by Solid Edge, Inventor and others.

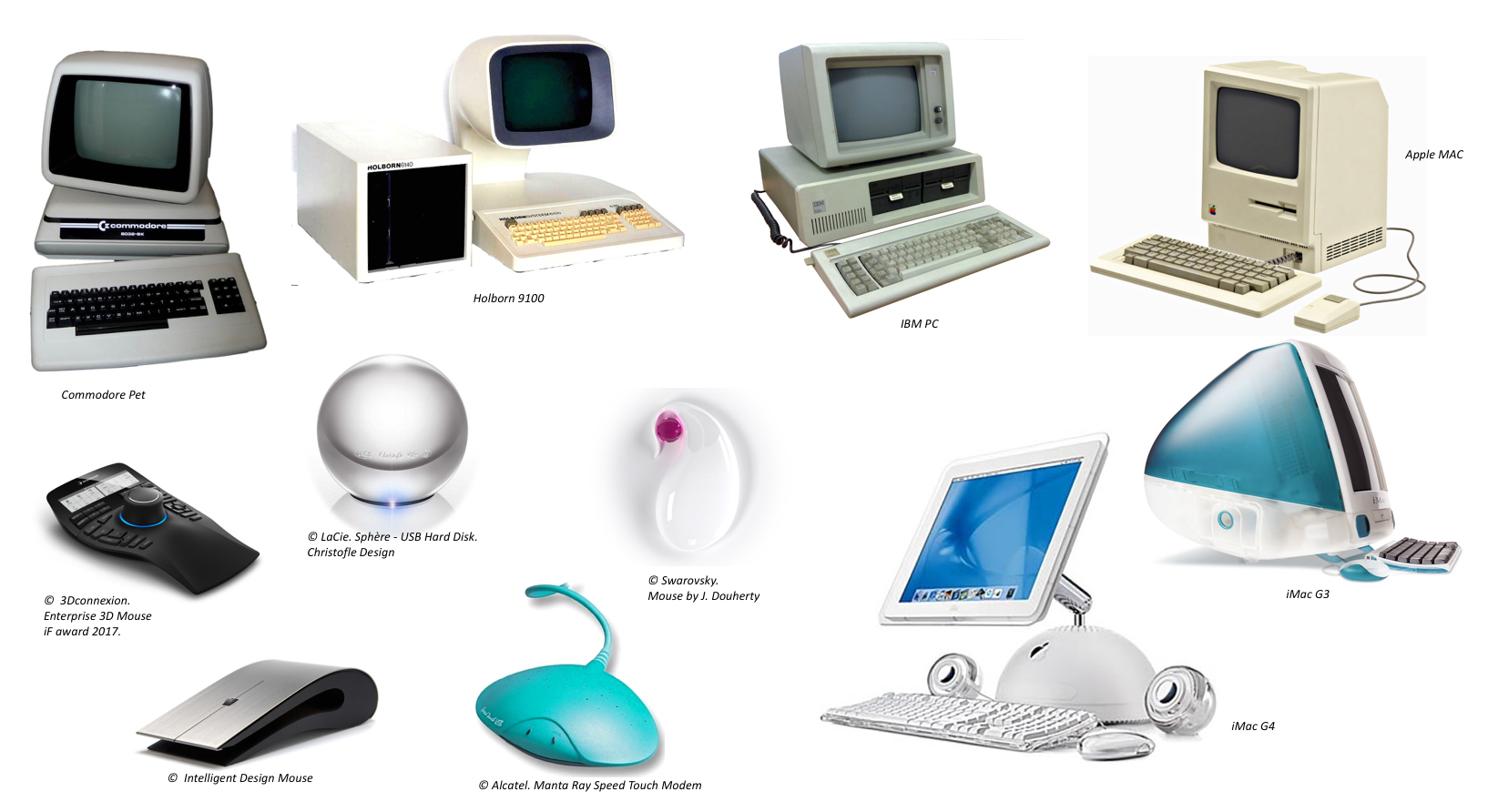

Since ancient times humans have striven to make objects that are pleasing to the eye and, whether of complex or simple construction, their external design has followed the esthetics of the time and place where they were created. Calculating machines are no exception. This page shows some examples: from the beautiful 18th-century mechanical calculator by Anton Braun, made for the House of Habsburg, to very simple, elegant modern designs.

18th-century mechanical calculators by Anton Braun (© Deutsches Museum, Munich and © Technisches Museum, Wien)

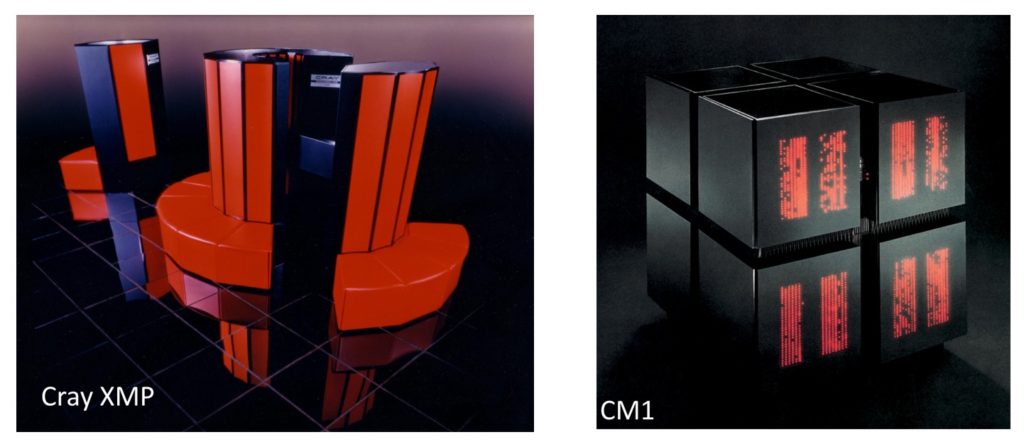

We look at some examples of design, from handheld and desktop calculators to supercomputers, some of which have made their way into modern art museums. In this respect one can note the elegant design of the early products of a company that favored design as much as functionality: Olivetti, with its calculators designed by Mario Bellini and others, as well as the elegantly simple designs of Dieter Rams and other iconic designers who paved the way to these developments. One can think here a lot in terms of form versus content, and in this respect it is interesting to read a designer’s point of view: e.g. Dieter Rams’ “ten commandments” on design (www.vitsoe.org) or the text by engineer-designer-artist Tamiko Thiel from the time she was designing the supercomputer known as the Connection Machine: http://tamikothiel.com/cm/. It is unfortunately hard to pay due tribute to them all in this limited space.

In the realm of supercomputers, the very distinctive “C” shape of the Cray-1, by Seymour Cray, was made with some esthetic design consideration besides pure functionality. Shown here is the somewhat similar Cray X-MP. Tamiko Thiel’s design of the Connection Machine, an elegant black box with twinkling light panels reflecting the machine’s inner operation, earned it a showing at MoMA, New York, in 2017. Thiel’s design is said to have influenced the design of Steve Jobs’ NeXT cube computer. Now many supercomputer vendors make it a point to give their machines an elegant dress.

Images: © Cray Research and courtesy Tamiko Thiel, © Thinking Machines Corporation, 1986. Photo: Steve Grohe.

The evolution of the personal computer’s design from the homebrew kit (see computer history) to the boring off-white/beige box (with similar accompanying peripherals), and then to the varied, elegant, sometimes playful designs of today, owes much to the design team of Steve Jobs’ Apple, led by Jonathan Ive. It should be said that Olivetti’s design mantras influenced Apple. Apple’s design strategy has in turn influenced the way other companies now design computers and peripherals. In the images below one can see the evolution from the hobbyist’s homebrew computer box to the rounded shape of an early Commodore PET computer. In 1981 a Dutch company, Holborn, produced their very interesting looking 9100 computer, designed by the Dutch industrial design studio Vos. In contrast, the IBM PC, which appeared the same year, was quite unrevolutionary.

The appearance of the Apple Mac, derived from the Lisa, in 1984 showed that one could design a PC differently. It popularized the use of a user-friendly GUI. After some ups and downs, in 1998 Apple came out with Ive’s translucent blue iMac, which definitely turned its back on the IBM PC style. Different candy-colored versions followed, closing the door on the beige box, which however seems hard to kill, and is now sometimes black! Since then Apple has turned out a series of cute designs like the “iLamp” iMac G4, followed by its other characteristic products like the iPod and iPhone. Other companies try to follow this design strategy. Here we just show some recent cool-looking peripherals.

How Artificial Intelligence approaches affect the Arts.

A long time ago the British mathematician Alan Turing had said (The Times, 1949): “I do not see why it (the machine) should not enter any one of the fields normally covered by the human intellect, and eventually compete on equal terms. I do not think you even draw the line about sonnets, though the comparison is perhaps a little bit unfair because a sonnet written by a machine will be better appreciated by another machine.”

Much progress has occurred since then, and AI is penetrating our lives more and more. Recent predictions of the South Korean Employment Information Agency for 2030 suggest strongly that machines will soon replace humans in a number of areas, except where creativity and negotiating powers are important. The win of IBM’s Watson computer in the Jeopardy game (2011) demonstrated its importance in applications requiring extensive data set consultation and its viability as, at least, a consultant in many areas. Clearly this indicates a significant loss of jobs and a necessity to seriously reconsider our education systems so that they focus on fostering creativity instead of learning by heart. But how are machines doing in creative professions today? We look briefly at some examples of experiments that are underway across the world in literature, language evolution, music and painting. You can find many other examples of paintings generated using AI approaches, e.g. on the RobotArt.org contest website and in the list at the end of this section (by far not complete, sorry).

The AI artist can produce great art works, but some complain, that these lack the emotional “human touch”… though there are attempts at introducing this as well into the AI framework. We comment more on AI artworks in the next paragraph. Note that whatever one may think about this, clearly these AI approaches will be highly useful in areas such as art restoration and authentication.

A recent sale of an “AI artist” work at a Christie’s auction (not really the first sale of an AI work), for a price higher than works by Andy Warhol and Roy Lichtenstein, signals an intrusion of “non-human” AI-generated artworks into the world art market. Is this really true? Some would like to say that the computer generates an artwork autonomously. However, it is still the fruit of considerable initial human input. The frequently used generative adversarial networks (GANs) are designed by humans and “trained” using data sets (reference artworks) chosen by the human trainer-designer. Some people use existing libraries of artworks (public domain or not); some use their own works, in which case the output depends on that specific human artist. In the case of an “original creative work” (as opposed to a simple copy), whilst the output can be unpredictable and is the result of multiple tests and incremental improvements through trial and error, it is still guided by some preprogrammed criteria. In all these respects the works we see are the result of a human artist using AI tools. But then, someone will say: can the output of a GAN not also be likened to that of a human who is influenced by artworks (or the surrounding world) and the training received?

Robots evolve their own language. Sony Computer Science Laboratories conducts research on language evolution and music. An interesting experiment on the evolution of language between robots was recently performed by the group of Luc Steels in collaboration with Humboldt University (HU), amongst others. Several HU Myon robots were left to explore, independently of each other, the movements they could make with their bodies, and attributed words of their own invention to them. They were then made to interact with each other. To begin communication, robot A chooses a word from its lexicon and asks another robot B to perform the corresponding action. Robot B, which has no idea what the word means, chooses an action, guessing randomly what it might be. If it is correct, they continue; otherwise robot A demonstrates the action corresponding to that word, and robot B stores this in its vocabulary. After a week of interaction the robots evolve their own common language and develop more abstract notions like left and right. Humans do not understand this language.
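The interaction loop described above can be sketched as a toy "language game". This is a highly simplified illustration and not the software used in the experiment: the actions, the invented words, the `Robot` class and the `play_round` function are all hypothetical, and following the description, the hearer stores a word only after a failed guess and a demonstration.

```python
import random

# Hypothetical repertoire of body movements.
ACTIONS = ["raise_arm", "turn_left", "turn_right", "step_forward"]


class Robot:
    def __init__(self, name, rng):
        self.rng = rng
        # Each robot privately invents its own word for every action.
        self.lexicon = {f"{name}-{i}": action for i, action in enumerate(ACTIONS)}

    def interpret(self, word):
        """Perform the action known for this word, or guess one at random."""
        if word in self.lexicon:
            return self.lexicon[word]
        return self.rng.choice(ACTIONS)

    def learn(self, word, action):
        self.lexicon[word] = action


def play_round(speaker, hearer, rng):
    """One interaction: the speaker names an action, the hearer tries it.
    On failure the speaker demonstrates and the hearer stores the word."""
    word = rng.choice(sorted(speaker.lexicon))
    intended = speaker.lexicon[word]
    success = hearer.interpret(word) == intended
    if not success:
        hearer.learn(word, intended)
    return success


if __name__ == "__main__":
    rng = random.Random()
    a, b = Robot("a", rng), Robot("b", rng)
    results = [play_round(a, b, rng) for _ in range(200)]
    print("successes in first 20 rounds:", sum(results[:20]))
    print("successes in last 20 rounds: ", sum(results[-20:]))
```

In this toy version the success rate rises as the hearer accumulates the speaker's words. The real experiment involved more robots, embodied perception and alignment strategies that are not modelled here.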

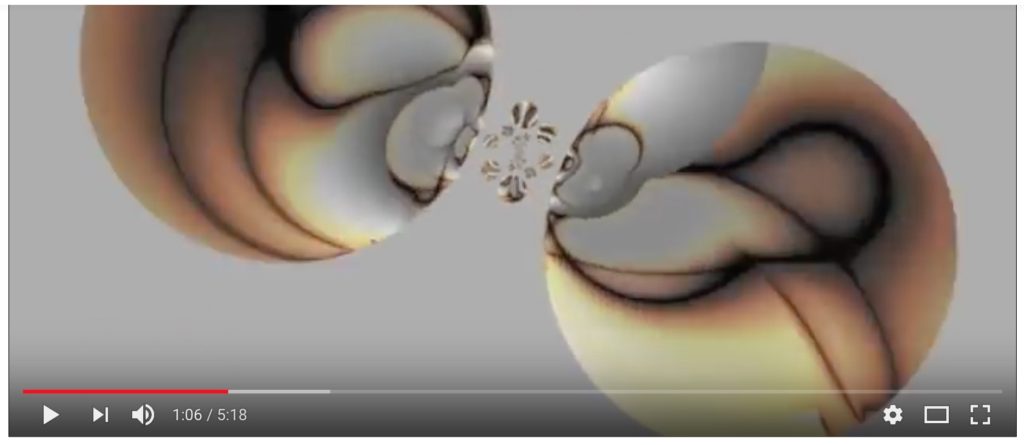

Music: from composer to opera conductor. The Sony laboratory also conducted experiments in which a robot jazz musician improvised successfully with a group of human musicians. A number of older experiments also exist. Around 1981 David Cope (USA) started his Experiments in Musical Intelligence (Emmy). Emmy evolved into a program that creates new output from music stored in a database, using a set of instructions for creating different but highly related replications. With this, Emmy produced music in the styles of classical composers: Bach by Design, Virtual Mozart, Virtual Rachmaninoff, … Emmy was then shut down and a newer version, Emily Howell, was created. Emily Howell produces music on the basis of a musical database that includes Emmy’s works and those of other composers. It has an interactive interface that responds to prompts, either musical pieces or voice. The complex method is described in Cope’s books. Listen to Emmy’s Vivaldi improvisation (the images are synthetic).

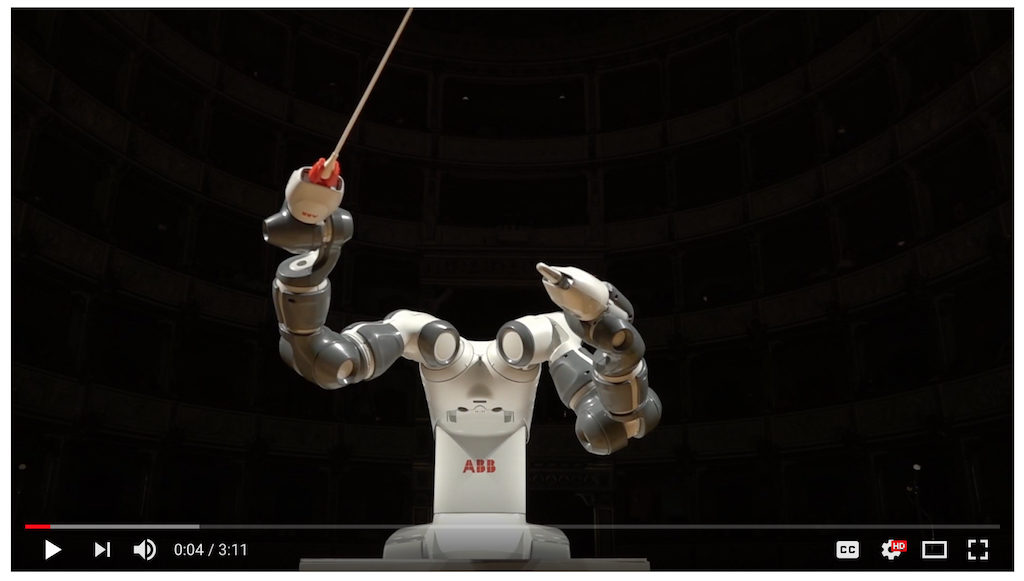

Conducting an orchestra. Several experiments on conducting an orchestra have been undertaken, including one by the robot ASIMO. YuMi, another robot with extremely flexible arms, designed by ABB Robotics, was taught to mimic the gestures of Andrea Colombini, conductor of the Lucca (Italy) Philharmonic Orchestra, and conducted the orchestra in a performance with singers Andrea Bocelli and Maria Luigia Borsi. Training YuMi to perform six minutes of music “took 17 hours of work.”

Many experiments on painting by robots are underway at various institutions and companies, including Google and Microsoft. There is now an international Robot Art Competition for robot painting, started by Andrew Conru, where you can find many robotic creations: https://robotart.org. A number of people are now using these AI approaches to produce very interesting works. We show some, hopefully illustrative, examples here.

AARON.

One of the oldest is AARON, by Harold Cohen (USA), which was started in 1973 and has continuously evolved since then. AARON learned to situate objects or people in 3D space in the 1980s, and could paint in color from 1990 onwards. It painted on an actual canvas and was taught to mix paint. It had a degree of independence: it has painted completely abstract forms and portraits of human figures without photos or other human input as reference. Some paintings were made completely independently, and in other cases there was a collaboration in which AARON defined the outline and Cohen painted with colors on top of that.

Left: “AARON with Decorative Panel” (1992). Oil on canvas. Below: “Rincon #3” (2006). Pigment ink on panel. Courtesy Harold Cohen Trust. Photo: Tom Machnik.

e-David: in quest of “style-space”.

e-David (Drawing Apparatus for Vivid Image Display) is a project of Oliver Deussen’s team at Konstanz University. It is somewhat like AARON, but the aim is to render realistic paintings, and the team hopes that their system will produce artworks that trigger aesthetic emotions in the viewer similar to those triggered by human-made drawings or paintings. e-David works from a picture of a human subject, a landscape, etc., which acts as its subject. A camera captures an image of the work in progress; e-David regularly compares it to the subject image and decides where to add the next stroke, adjusting its moves, brush strokes and colour based on what it sees through the camera. e-David can use a variety of styles: pointillistic, ink pen, long or short brushes…

Different styles of e-David paintings. Courtesy Oliver Deussen.

In general, Deussen’s method consists of dividing the painting into regions and painting in translucent layers, first with rough strokes and then with refined strokes.
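
The camera feedback loop described for e-David (look at the canvas, compare it with the subject image, place the next stroke where it is most needed) can be illustrated with a toy example. This is only a sketch under simplifying assumptions and not Deussen's actual algorithm: the "image" is a small grid of grey values between 0 (black) and 1 (white), a "stroke" touches a single cell, and the `opacity` parameter is a made-up stand-in for translucent paint.

```python
def total_error(canvas, target):
    """Sum of absolute differences between the canvas and the subject image."""
    return sum(abs(c - t)
               for row_c, row_t in zip(canvas, target)
               for c, t in zip(row_c, row_t))


def next_stroke(canvas, target):
    """Pick the cell where the observed canvas differs most from the subject."""
    cells = [(abs(canvas[y][x] - target[y][x]), y, x)
             for y in range(len(target))
             for x in range(len(target[0]))]
    _, y, x = max(cells)
    return y, x


def paint(target, n_strokes=200, opacity=0.5):
    """Repeat the observe-compare-stroke cycle and record the error each time."""
    canvas = [[1.0] * len(target[0]) for _ in target]  # blank white canvas
    history = [total_error(canvas, target)]
    for _ in range(n_strokes):
        y, x = next_stroke(canvas, target)  # "camera" step: find the worst spot
        # Translucent stroke: blend the desired tone over what is already there.
        canvas[y][x] += opacity * (target[y][x] - canvas[y][x])
        history.append(total_error(canvas, target))
    return canvas, history


if __name__ == "__main__":
    subject = [[0.0, 0.5, 1.0],
               [0.2, 0.8, 0.4],
               [1.0, 0.0, 0.6]]
    _, errors = paint(subject, n_strokes=50)
    print("error before:", errors[0], "after:", errors[-1])
```

Each stroke only partly covers what lies beneath it, so the canvas approaches the subject in successive layers rather than in a single pass.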

The Paintingfool by Simon Colton (UK): the moody software.

The Paintingfool is conceived to have “an emotional response”. It painted portraits during the exhibition You Can’t Know My Mind at Galerie Oberkampf in Paris while reading articles in The Guardian newspaper and performing sentiment analysis on them, which defined its own ‘mood’: very positive, positive, experimental, reflective, negative or very negative. The software refuses to paint if the mood is very negative. Otherwise it chooses an adjective appropriate to the mood, and the sitter is asked to adopt a corresponding expression (e.g. to smile if the mood is bright, or to look sad if it is bleary). It then painted in a style chosen with reference to the adjective, and the result was analysed by DARCI to see to what extent the portrait conveyed the mood, i.e. the chosen adjective. DARCI is a visuo-linguistic association (VLA) neural network by Dan Ventura (Brigham Young University, USA) that has learnt correlations between visual features and semantic (adjectival) concepts.

Images: (left) The dancing salesman problem (No photos harmed exhibition); (middle) Afghanistan collage: Paintingfool downloaded a story on the war in Afghanistan and identified keywords, which it used to find relevant images on Flickr. It then developed a painterly rendition of these images in a collage, juxtaposing a fighter plane with an explosion, a family, an Afghan girl, and a field of war graves; (right) Bleary mood portrait. The Paintingfool. Courtesy Simon Colton.

The Paintingfool also writes poetry, in particular reacting to newspaper feeds by creating correspondingly influenced poems.
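
The mood-driven portrait procedure (read articles, derive a mood, refuse to paint or choose an adjective) can be sketched in a few lines. Everything specific here is an assumption made for illustration: the word lists, the score thresholds and the adjectives other than “bright” and “bleary” are invented, and since the way the software reaches the “experimental” mood is not described, the sketch leaves that mood out and treats near-neutral scores as “reflective”.

```python
# Hypothetical word lists standing in for a real sentiment analyser.
POSITIVE = {"joy", "peace", "win", "growth"}
NEGATIVE = {"war", "crisis", "death", "loss"}

# Hypothetical mood-to-adjective table ("bright" and "bleary" come from the text).
ADJECTIVES = {
    "very positive": "joyful",
    "positive": "bright",
    "reflective": "calm",
    "negative": "bleary",
}


def sentiment(text):
    """Crude score in [-1, 1]: (positive words - negative words) / all words."""
    words = [w.strip(".,;:!?") for w in text.lower().split()]
    score = sum((w in POSITIVE) - (w in NEGATIVE) for w in words)
    return score / max(len(words), 1)


def mood_from_articles(articles):
    """Average the article scores and map them to a mood (thresholds assumed)."""
    score = sum(sentiment(a) for a in articles) / len(articles)
    if score <= -0.2:
        return "very negative"
    if score < -0.05:
        return "negative"
    if score <= 0.05:
        return "reflective"
    if score < 0.2:
        return "positive"
    return "very positive"


def portrait_request(mood):
    """Return the adjective the sitter should express, or None to refuse."""
    if mood == "very negative":
        return None  # the software declines to paint
    return ADJECTIVES[mood]


if __name__ == "__main__":
    mood = mood_from_articles(["Joy and peace"])
    print(mood, "->", portrait_request(mood))
```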

The “fake” Rembrandt.

In 2016 a portrait that looks like an actual Rembrandt was unveiled by a group comprised of a team from Delft University of Technology and two Dutch art museums – Mauritshuis and Rembrandthuis. This was the result of a two-year project, entitled “The Next Rembrandt” initiated by Bas Korsten from the J. Walter Thompson advertising agency. A deep learning computer analysis of high-resolution digital scans of 346 images of Rembrandt’s work was performed, including details of faces, their orientation, lighting, and texture of paintings. The program then sketched facial details separately and then put them together. Once the 2D image was complete, a 3D printer was used to add layers to create realistic looking texture. The team believes this method could be used to restore damaged artworks.

“The Next Rembrandt”. Courtesy: Bas Korsten.

AI Art at Christie’s Auction.

A significant recent event was the appearance at a Christie’s auction of an artwork generated by an AI painting algorithm, from the French art collective Obvious. The painting, called “Edmond de Belamy, from La Famille de Belamy”, sold for $432,500, more than 40 times the initial sale estimate of $7,000 to $10,000. At the same time an Andy Warhol print sold for $75,000 and a Roy Lichtenstein for $87,500. The work was based on the original algorithm by Robbie Barrat (see list below), which was shared under an open source licence.

Edmond de Belamy, from La Famille de Belamy. Image courtesy Obvious.

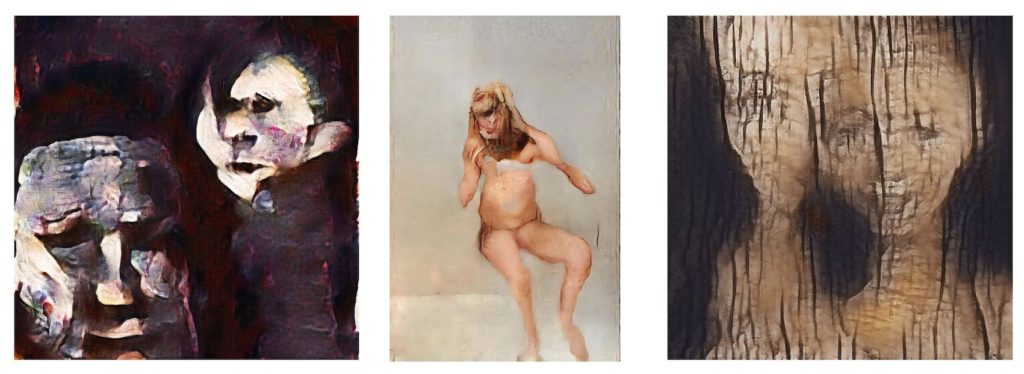

There are now more and more artist-programmers producing very interesting AI artworks, some of which even reach auction houses. A list of some of those engaged in this field is given at the end of this section. We show some here, starting with that of Robbie Barrat. First a note: in a GAN one network generates images while another compares them with a reference image set given by the artist and judges whether each one passes as belonging to that set; the generator is adjusted according to this verdict. Sometimes GANs “go wrong” and are unable to attain the objective set by the artist, i.e. to properly imitate the reference image set. The images are then surprisingly interesting and surrealistic, sometimes somewhat in the style of Francis Bacon. In some cases the human artist induces errors on purpose to attain such effects.
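
The interplay between the two networks in a GAN can be illustrated with a toy one-dimensional version, in which single numbers stand in for images. This is a minimal sketch and not the code of any artist mentioned here: the linear generator, the logistic discriminator, the learning rate and the “reference set” (numbers drawn around 4.0) are all assumptions chosen for brevity, and no machine-learning library is used.

```python
import math
import random


def sigmoid(u):
    """Numerically safe logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.05, real_mean=4.0, seed=0):
    """Alternate discriminator and generator updates on 1-D samples."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: G(z) = a * z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        x_real = rng.gauss(real_mean, 1.0)  # a sample from the reference set
        z = rng.gauss(0.0, 1.0)             # random input to the generator
        x_fake = a * z + b
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        # Discriminator step: raise D on real samples, lower it on generated ones
        # (gradient ascent on log D(x_real) + log(1 - D(x_fake))).
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)
        # Generator step: shift G so the updated D rates its output as more real
        # (gradient ascent on log D(G(z))).
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w
        a += lr * grad_x * z
        b += lr * grad_x
    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator scale and offset:", a, b)
    print("discriminator weights:", w, c)
```

Training of this kind has no guarantee of settling down; the two sets of parameters can chase each other or the generator can lose its variety, which is one way a GAN may “go wrong”.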

Robbie Barrat (USA).

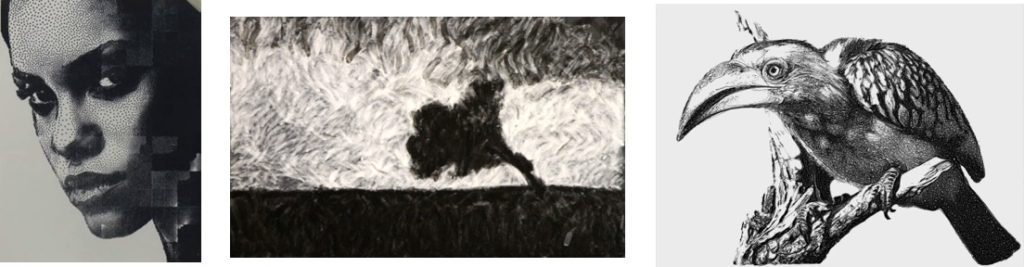

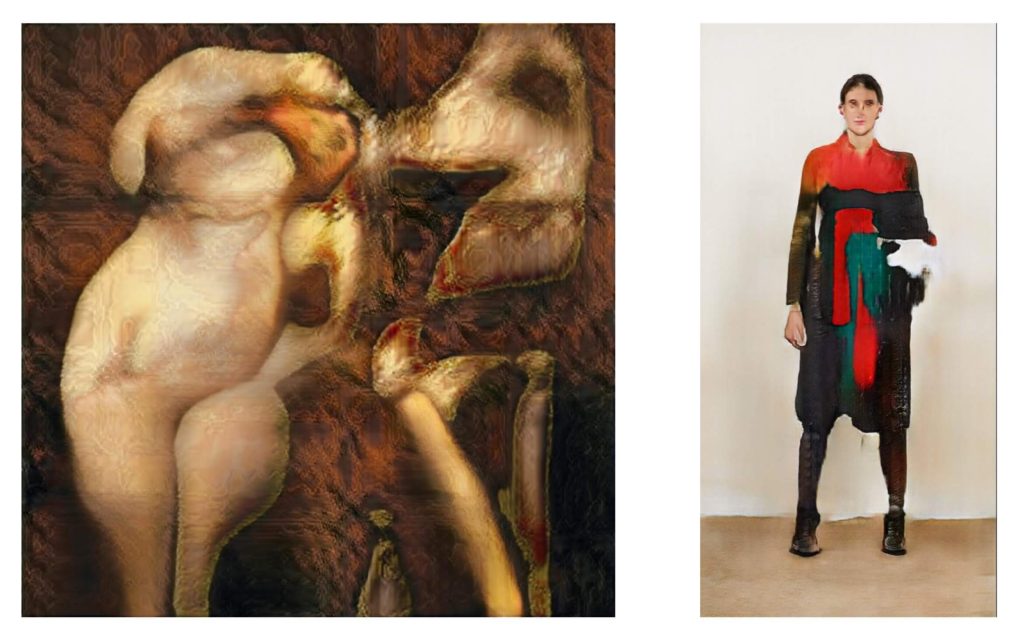

The 19-year-old, self-taught Robbie is a prolific experimenter in AI art, covering music, painting and even clothing design. The images here are from the artist’s GitHub page, and the comments included are from those pages: https://robbiebarrat.github.io

AI Generated Nudes. Using an implementation of Progressive Growing of GANs and a corpus of thousands of nude portraits scraped primarily from WikiArt, a neural network was trained to create nude portraits. …The machine failed to learn all of the proper attributes found in nude portraits and … it generates surreal blobs of flesh.

Neural Network Balenciaga. Could a fashion designer use AI? Robbie experimented using Balenciaga’s outfits. In his words: “Using a corpus of Balenciaga runway shows, catalogues, and campaigns, a Pix2PixHD network was trained to reconstruct Balenciaga outfits from Densepose silhouettes. The results are outfits which are novel but at the same time heavily inspired by Balenciaga’s past few years under Demna Gvasalia. The network lacks any contextual awareness of the non-visual functions of clothing (e.g. why people carry bags, whether or not bags are separate from pants, why people prefer symmetrical outfits) – and in turn produces more strange outfits that completely disregard these functions.”

Mario Klingemann (Germany).

Very recently one of Mario’s AI works sold at Sotheby’s for a “reasonable” price of £40,000. He sometimes experiments by inducing “errors” in the output of the GAN on purpose, with very bizarre effects.

In his words: “I’m an artist and a skeptic with a curious mind. My preferred tools are neural networks, code and algorithms… If there is one common denominator it’s my desire to understand, question and subvert the inner workings of systems of any kind. I also have a deep interest in human perception and aesthetic theory.”

The works here are from http://underdestruction.com/about/ (courtesy Mario Klingemann).

Helena Sarin.

Helena is a Russian-American computer programmer and prominent AI artist who uses her own photography or artworks as input data sets for her GANs. Much more of her work may be found here: https://www.flickr.com/photos/tarelki/. Images courtesy Helena Sarin.

Read more about Helena Sarin, and see an article she wrote about the accessibility of AI art practice: https://bit.ly/2FIJLpk (#neuralBricolage: An Independent Artist’s Guide to AI Artwork That Doesn’t Require a Fortune).

Left: city, rain, solitude… GAN trained on cityscape photography. Right: The Gate Keeper. Images courtesy Helena Sarin.

Tom White and “Ways of Seeing” (New Zealand).

Tom is a researcher in computational design at Victoria University of Wellington. His recent AI artworks arise from an attempt to understand the differences between human visual perception, which relies on years of experience and billions of images seen, and that of existing AI systems, which are used more and more around us, e.g. in self-driving cars or for facial recognition. These systems are trained on finite series of images without understanding what they really are, basically by figuring out common traits. So does a computer see the same object as you do, or see it the same way? When does it go wrong, and why? Here are some examples of objects and what they are according to the “AI observer”. What would you see?

More of Tom’s artwork may be found here: http://drib.net/

Electric Fan (left) and Cello (right). The Cello painting was actually based on a computer perception of a Cello and the Musician. Images Courtesy Tom White.

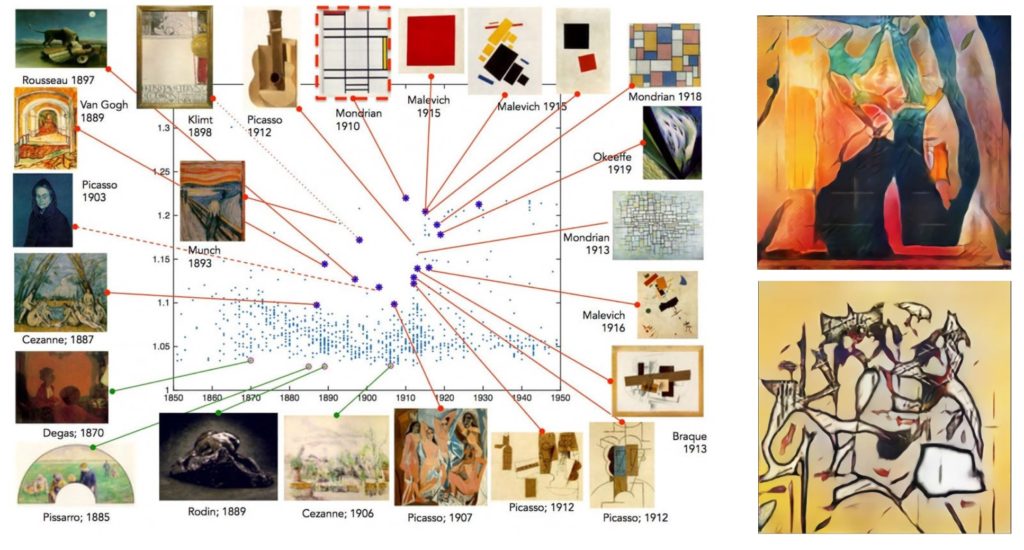

Work is being done not only to get computers to draw but also to try to evaluate the significance of artworks. AICAN, by Ahmed Elgammal (USA) and colleagues, is a computer program that they say is based on their Creative Adversarial Network (CAN) approach, a modification of the Generative Adversarial Network (GAN) approach. CAN is designed to generate work that does not fit known artistic styles, thus “maximizing deviation from established styles and minimizing deviation from art distribution.” CAN both paints and gives critical art assessments. It was given over 80,000 digitized images of Western paintings from the 15th to the 20th century, arranged historically and related to their era. The algorithm was trained to assess paintings from the point of view of their originality and their influence in history. Using this approach, the program was made to evaluate a number of artworks and came up with an assessment not too different from the prevailing ideas in the art world (see image). CAN also paints, with the degree of “stylistic ambiguity” and “deviations from style norms” that characterizes the algorithm. In Elgammal’s words, they push their algorithm to “emphasize the most important arousal-raising properties for aesthetics: novelty, surprisingness, complexity, and puzzlingness.” What is impressive is that when its works were shown to people alongside Abstract Expressionist works and non-figurative works from Art Basel in 2016, fewer than 50% concluded that the CAN work was by a computer, and many people liked its work almost more than the works of human artists.

AICAN ranking of paintings according to their creativity (left) and a CAN painting (right). Courtesy: AICAN, Ahmed Elgammal, The Art & AI Lab at Rutgers University.

OTHER CREATORS.

Also check out newsletters on AI art, such as the Creative AI Newsletter by Luba Elliott, and various contests, such as the Robotics Art Contest for paintings.

- Harshit Agrawal, India

- Memo Akten, Istanbul.

- Albert Barqué-Duran, London, Berlin & Barcelona.

- Robbie Barrat, US

- Anil Bawa-Cavia, Berlin & London.

- Harold Cohen, US

- Ian Cheng, NYC.

- Sougwen Chung, NYC.

- Simon Colton, UK

- Sofia Crespo, Berlin.

- Hannah Davis, NYC.

- Heather Dewey-Hagborg, Chicago & NYC.

- Oliver Deussen, Germany

- Justine Emard, France

- Rebecca Fiebrink, London.

- Ross Goodwin, Los Angeles.

- Mario Klingemann, Munich.

- Gene Kogan, Berlin.

- Egor Kraft, Moscow (Russia) & Berlin

- Jon McCormack, Australia

- Kyle McDonald, Los Angeles

- Dmitry Morozov aka ::vtol::, Russia

- Erik Matrai, Hungary

- Simone C. Niquille, Amsterdam.

- Helena Nikonole, Moscow

- Andreas Refsgaard, Copenhagen.

- Tivon Rice, Seattle & The Hague.

- Anna Ridler, London.

- Helena Sarin

- Philipp Schmitt, NYC.

- Yining Shi, NYC.

- Shinseungback Kimyonghun (Shin Seung Back & Kim Yong Hun), Seoul.

- Xavier Snelgrove, Toronto.

- Cassie Tarakajian, Brooklyn, NY.

- Francis Tseng, NY

- Michael Tyka, Seattle.

- Cristóbal Valenzuela, NYC.

- Tom White, Wellington.

- Samim Winiger, Berlin.

- ART GROUP «WHERE DOGS RUN» (Olga Inozemtseva, Alexey Korzukhin, Vladislav Bulatov, and Natalya Grekhova), Russia